Hive vs Spark vs Presto: SQL Performance Benchmarking

In my previous post, we went over the qualitative comparisons between Hive, Spark and Presto. In this post, we will do a more detailed analysis, by virtue of a series of performance benchmarking tests on these three query engines.

Benchmarking Data Set

For this benchmarking, we have two tables. Some of the key points of the setup are:

- All the query engines are using the Hive metastore for table definitions as Presto and Spark both natively support Hive tables

- All the tables are external Hive tables with data stored in S3

- All the tables are using Parquet and ORC as a storage format

Tables:

1. product_sales: It has ~6 billion records

2. product_item: It has ~589k records

Hardware

Tests were done on the following EMR cluster configurations,

EMR Version: 5.8

Spark: 2.2.0

Hive: 2.3.0

Presto: 0.170

Nodes:

Master Node: 1x r4.16xlarge

Task nodes: 8x r4.8xlarge

Query Types

There are three types of queries which were tested,

- Simple query:

select * from product_item limit 10;

2. Medium query: In this query, two tables were joined and where clauses were put to filter data based on date partitions

select p.product_id, cast('2017-07-31' as date) as sales_month, sum(p.net_ordered_product_sales ) as sales_value

from product_sales p where

p.product_id in (select distinct product_id from product_items)

and p.activity_day between cast('2017-07-01' as date) and cast('2017-07-31' as date)

group by p.product_id;

from product_sales p where

p.product_id in (select distinct product_id from product_items)

and p.activity_day between cast('2017-07-01' as date) and cast('2017-07-31' as date)

group by p.product_id;

3. Complex query: In this query, data is being aggregated after the joins. Also, to stretch the volume of data, no date filters are being used

select p.product_id, sum(p.net_ordered_product_sales ) as sales_value

from product_sales p where

p.product_id in (select distinct product_id from product_items )

group by p.product_id;

from product_sales p where

p.product_id in (select distinct product_id from product_items )

group by p.product_id;

Test Methodology

1. Power run: When the only thing running on the EMR cluster was this query. This was done to evaluate absolute performance with no resource contention of any sort.

2. Concurrent runs: To test impact of concurrent loads on the cluster, series of tests were done with concurrency factors of 10, 20, 30, 40 and 50. The set of concurrent queries were distributed evenly among the three query types (e.g. for the concurrency factor of 50, 17 instances of Query1, 17 instances of Query2 and 16 instances of Query3 were executed simultaneously).

3. Multiple Concurrent runs : We tested the impact of concurrent load by firing 10 concurrent queries and then waited for 2 minutes and then fired 20 concurrent queries and then fired 30 concurrent queries after a delay of 2 minutes.

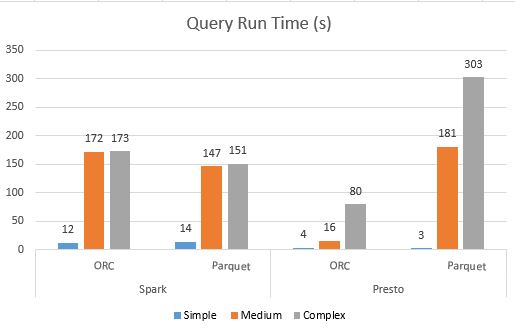

Results Summary

We did the same tests on a Redshift cluster as well and it performed better that all the other options for low concurrency tests. On the other hand, we could clearly see the effects of increasing concurrency in Redshift, while Presto and Spark scaled much more linearly.

- Hive remained the slowest competitor for most executions while the fight was much closer between Presto and Spark. That's the reason we did not finish all the tests with Hive.

- Presto with ORC format excelled for smaller and medium queries while Spark performed increasingly better as the query complexity increased.

- All engines demonstrate consistent query performance degradation under concurrent workloads.

- There were no failures for any of the engines up to 20 concurrent queries. For larger number of concurrent queries, we had to tweak some configs for each of the engines.

This comment has been removed by the author.

ReplyDelete